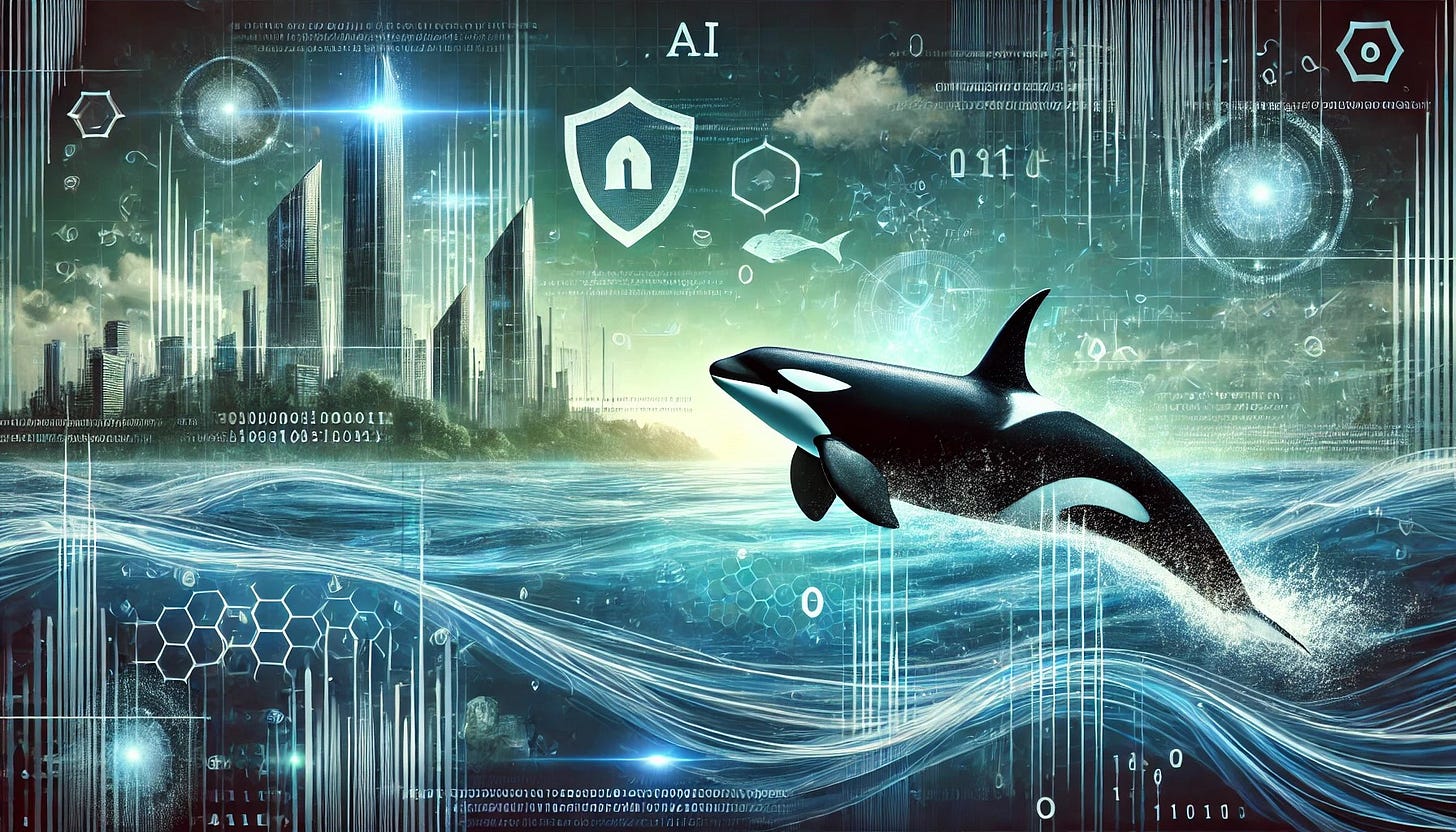

Generative AI now features in the production environments of several large organizations, yet little research has examined its security. Orca Security seeks to change this with its “2024 - State of AI Security Report”.

In this post, I will summarize the report’s key findings, analyze their relevance, and consider the future of AI Security.

Contents

Key Findings

AI Usage

Vulnerabilities in AI packages

Exposed AI models

Insecure access

Misconfigurations

Encryption

Final Thoughts - The Future

Key Findings

Orca put forward 3 key findings in the executive summary of their report. Let’s take a look at each one:

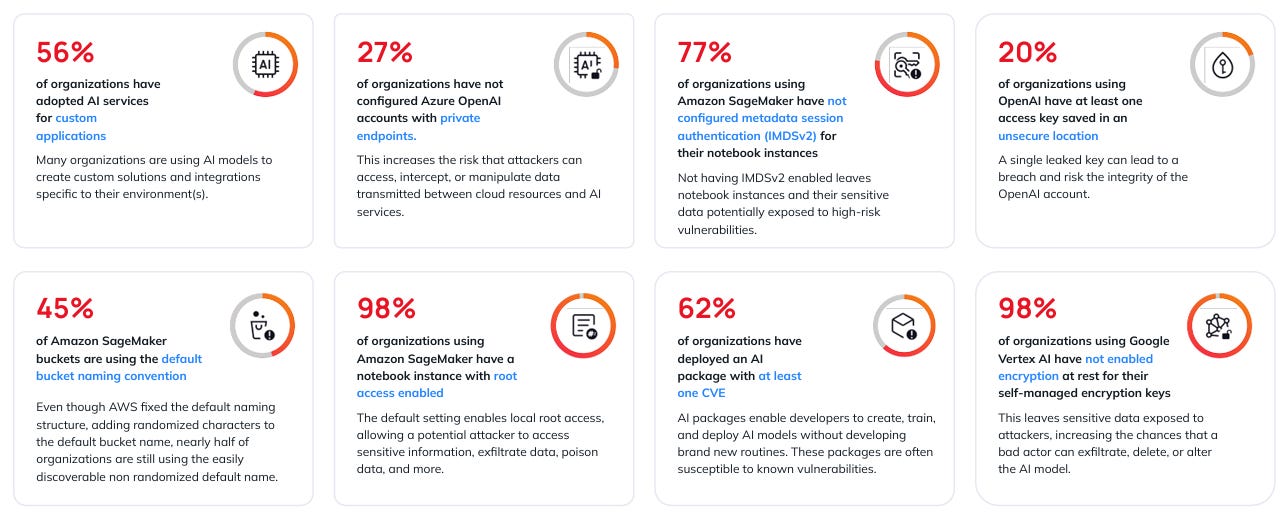

More than half of organizations are deploying their own AI models - This is not a groundbreaking finding, and I expect the true percentage is even higher. Still, confirming the figure highlights the relevance of AI Security.

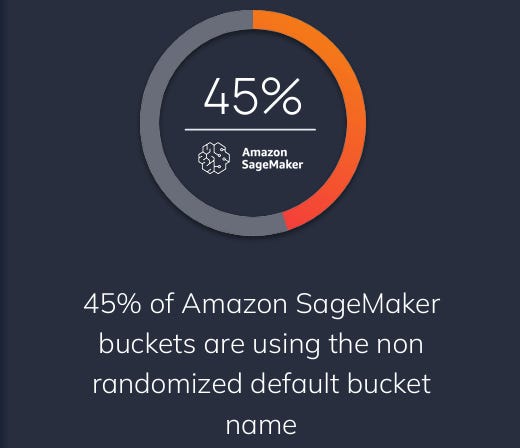

Default AI settings are often accepted without regard for security - Orca gives several examples to back this up, such as 45% of Amazon SageMaker buckets using non-randomized default bucket names. If a vulnerability is later found in a default setting, it may open an attack vector into many organizations at once.

Most vulnerabilities in AI models are low to medium risk - 62% of organizations have deployed an AI package with at least one CVE, with an average CVSS score of 6.9. While this sounds alarming, only 0.2% of these vulnerabilities have a public exploit.
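To put the 6.9 average in context, the sketch below maps a score onto the qualitative severity scale from the CVSS v3.x specification (Low 0.1-3.9, Medium 4.0-6.9, High 7.0-8.9, Critical 9.0-10.0). It assumes the report's scores are CVSS v3.x base scores, which the summary above does not state explicitly.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# The report's average score lands at the very top of the Medium band.
print(cvss_severity(6.9))  # Medium
```

An average of 6.9 is one tenth of a point below the High band, so "low to medium risk" is accurate but sits close to the boundary.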

AI Usage

Orca published some fascinating graphics in its report, giving insight into which AI models and packages organizations use.

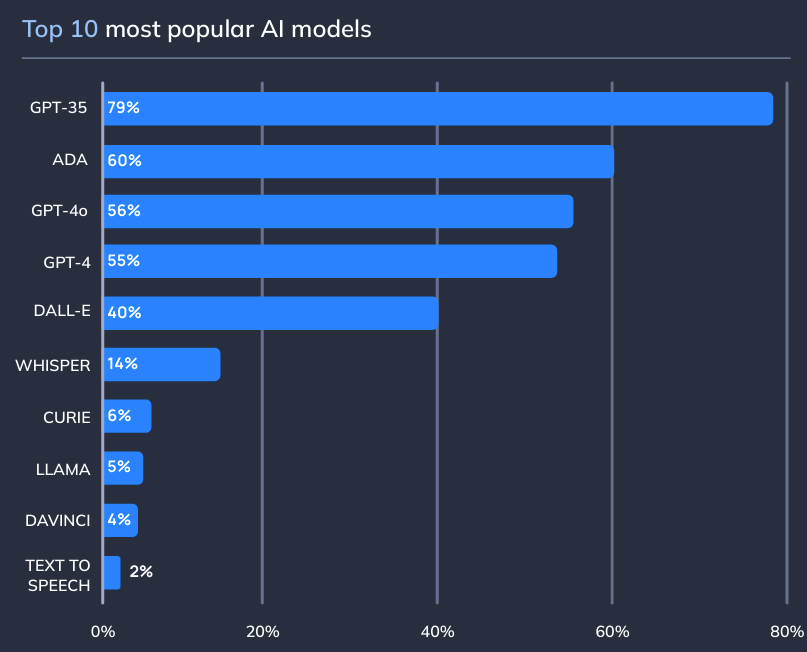

Usage by AI model

OpenAI’s models overwhelmingly dominate the market; Llama only sees 5% usage compared to GPT-3.5’s 79%. While I expected OpenAI to lead, I did not expect them to lead by this much.

If OpenAI is compromised in the future, attackers could potentially infiltrate many organizations at once, simply because its models are so ubiquitous.

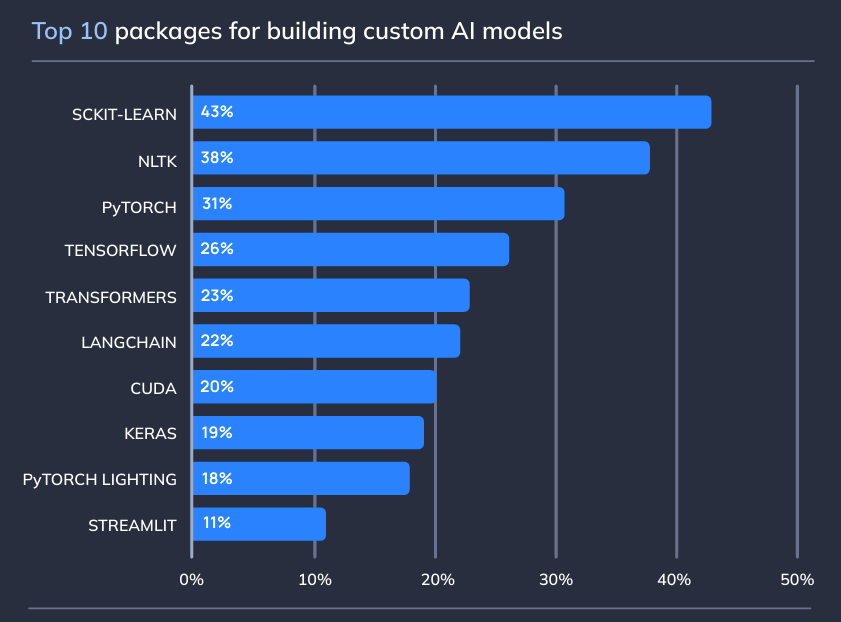

Usage by AI package

This graphic shows a far more balanced distribution of the packages used to build AI models. PyTorch accounts for 31% of usage; this is concerning because PyTorch's default model format relies on Python's pickle, which can lead to remote code execution if an untrusted file is deserialized insecurely.
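The sketch below illustrates why pickle is risky, using only the standard library rather than PyTorch itself. An object's `__reduce__` method tells pickle which callable to invoke on load, so whoever creates the file chooses what code runs. The `Payload` class and its harmless `sum` call are hypothetical stand-ins for a malicious command. The `RestrictedUnpickler` follows the "restricting globals" pattern from the Python `pickle` documentation; recent PyTorch releases offer a `weights_only` option on `torch.load` that applies a similar allow-list idea.

```python
import io
import pickle


class Payload:
    """Illustrative object: __reduce__ tells pickle which callable to run on load."""

    def __reduce__(self):
        # A real attacker would return something like (os.system, ("<command>",)).
        # This harmless stand-in makes unpickling call sum([1, 2, 3]).
        return (sum, ([1, 2, 3],))


class RestrictedUnpickler(pickle.Unpickler):
    """Resolve only allow-listed globals; reject every other import."""

    ALLOWED = {("builtins", "list"), ("builtins", "dict")}

    def find_class(self, module, name):
        if (module, name) in self.ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    return RestrictedUnpickler(io.BytesIO(data)).load()


payload = pickle.dumps(Payload())

# Plain pickle.loads runs whatever callable the file's author chose.
print(pickle.loads(payload))  # 6

# The restricted unpickler refuses to resolve builtins.sum.
try:
    restricted_loads(payload)
except pickle.UnpicklingError as exc:
    print(exc)  # blocked global: builtins.sum
```

The key point is that no method on the loaded object is ever called by the victim; simply deserializing the file is enough to execute the attacker's chosen function.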

Read my article below to find out how Hugging Face was compromised in this manner!

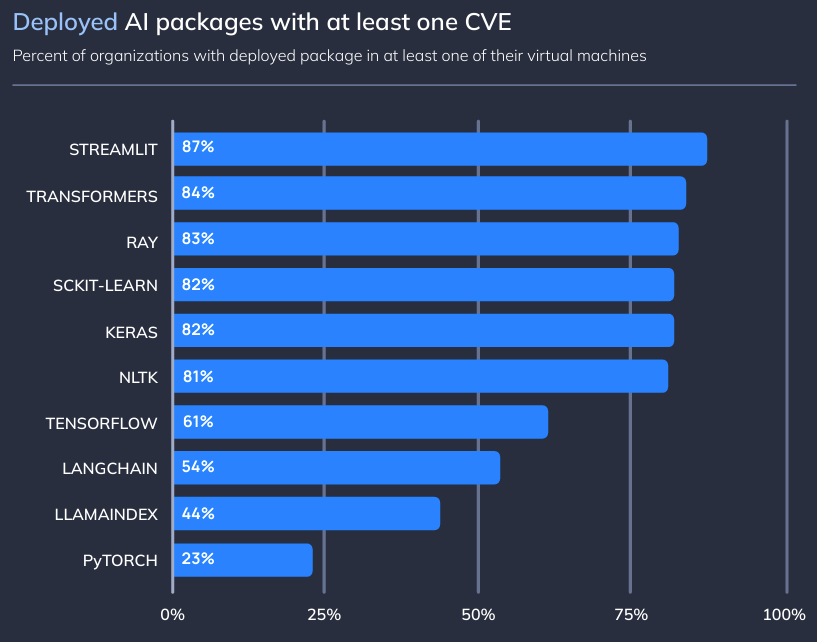

Vulnerabilities in AI packages

This graph seems more alarming than it actually is. While a large proportion of AI packages contain at least one CVE, many of these are theoretical and not exploitable in the wild. Orca notes that medium-risk CVEs can still constitute a critical risk if they are chained with other vulnerabilities in a target environment. This is a valid point and applies to all branches of technology, not just AI.

Exposed AI models

Orca cites a recent case study by Aqua Security. When a user creates an Amazon SageMaker Canvas, the service automatically creates an S3 bucket with a default naming convention:

sagemaker-[Region]-[Account-ID]

If the bucket is public, attackers only need to know the region and account ID of the SageMaker Canvas instance to view its contents. Since the research was released, Amazon has begun adding a randomized number to new default SageMaker bucket names; however, 45% of buckets still have the old, guessable name.
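To show how small the search space is, the sketch below generates every candidate name under the old convention for a single account. The account ID and the region list are hypothetical placeholders (AWS has a few dozen public regions, so a full list would still be short). The code only builds strings; it does not contact AWS.

```python
# Hypothetical account ID and a subset of AWS regions, for illustration only.
ACCOUNT_ID = "123456789012"
REGIONS = ["us-east-1", "us-east-2", "us-west-2", "eu-west-1", "eu-central-1", "ap-southeast-2"]


def default_canvas_bucket_names(account_id: str, regions: list[str]) -> list[str]:
    """Build the old, non-randomized default names: sagemaker-[Region]-[Account-ID]."""
    return [f"sagemaker-{region}-{account_id}" for region in regions]


for name in default_canvas_bucket_names(ACCOUNT_ID, REGIONS):
    print(name)
```

Once an attacker learns an account ID, one candidate per region is all it takes, which is why appending a random suffix meaningfully raises the bar.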

This case study highlights a lack of awareness of AI Security, which leaves many organizations with vulnerable AI infrastructure still running in their environments.

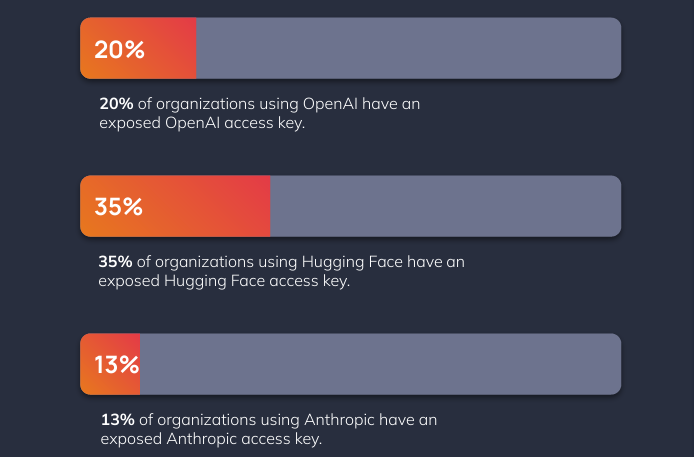

Insecure access

This is a classic issue - developers committing API keys to publicly accessible codebases. Given that it is 2024, the percentage of organizations affected is surprisingly high! Attackers can exploit these keys for account takeover, data theft, and other attacks.
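A minimal pre-commit style check can catch the most obvious cases before they are pushed. The sketch below scans text line by line for two key shapes: an "sk-" prefixed OpenAI-style key and the well-known "AKIA" prefix of AWS access key IDs. The exact patterns are simplified assumptions; dedicated secret scanners maintain far more precise, provider-specific rules, and the sample key is fake.

```python
import re

# Simplified, assumed key shapes for illustration; not exhaustive or authoritative.
KEY_PATTERNS = {
    "openai-style key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}\b"),
    "aws access key id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, key type) for every line that looks like it holds a key."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in KEY_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_no, kind))
    return hits


sample = 'MODEL = "gpt-3.5-turbo"\nOPENAI_API_KEY = "sk-FAKEfakeFAKEfakeFAKEfake1234"\n'
print(find_secrets(sample))  # [(2, 'openai-style key')]
```

Keys should instead be loaded from environment variables or a secrets manager, and any key that has already been committed should be revoked, since removing it from the latest commit does not remove it from history.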

Misconfigurations

Because Generative AI is such a new field, security misconfigurations are incredibly common - in fact, every risk in the OWASP Machine Learning Security Top Ten can arise from a misconfiguration.

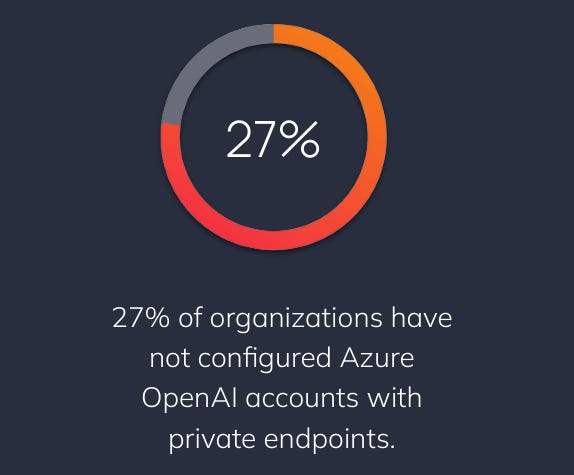

Orca Security found that 27% of organizations have not configured Azure OpenAI accounts with private endpoints. While this arguably isn’t a misconfiguration, it increases an organization's attack surface, which could lead to exploits such as data theft.
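A simple inventory check can flag such accounts. The sketch below assumes resource definitions shaped like exported ARM JSON for `Microsoft.CognitiveServices/accounts`, where Azure OpenAI accounts have `kind` set to `"OpenAI"` and list private endpoints under `properties.privateEndpointConnections`. Treat those field names as assumptions to verify against your own exports; the account names are hypothetical.

```python
def lacks_private_endpoint(resource: dict) -> bool:
    """Flag an Azure OpenAI account with no private endpoint connection.

    Assumes an ARM-style resource dict; property names should be checked
    against the definitions exported from your own subscription.
    """
    if resource.get("kind") != "OpenAI":
        return False  # Only Azure OpenAI accounts are in scope here.
    props = resource.get("properties", {})
    return not props.get("privateEndpointConnections")


# Hypothetical inventory for illustration.
accounts = [
    {"name": "chat-prod", "kind": "OpenAI",
     "properties": {"publicNetworkAccess": "Enabled", "privateEndpointConnections": []}},
    {"name": "chat-internal", "kind": "OpenAI",
     "properties": {"publicNetworkAccess": "Disabled",
                    "privateEndpointConnections": [{"name": "pe-chat-internal"}]}},
]

print([a["name"] for a in accounts if lacks_private_endpoint(a)])  # ['chat-prod']
```

Treating a missing property the same as an empty list is a deliberate choice: if the inventory cannot prove a private endpoint exists, the account is flagged for review.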

Encryption

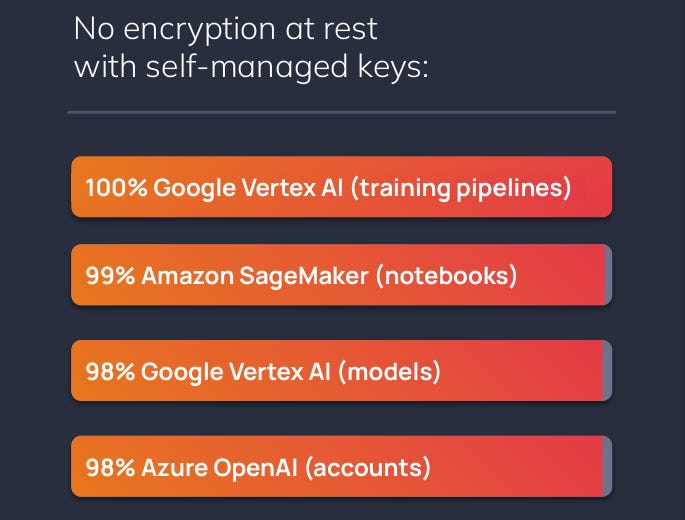

The last section of the report highlights that almost no organizations encrypt AI data with self-managed keys. The figure seems alarming, but Orca could not determine whether these organizations encrypt the data by other means, such as provider-managed keys. This makes it difficult to draw any firm conclusions.

Final Thoughts - The Future

Overall, Orca’s report is insightful and draws several valuable conclusions on the state of AI Security in 2024. The main underlying theme I take from it is that organizations are rapidly integrating AI solutions into their infrastructure, yet don’t fully understand the security risks.

We have yet to see a high-severity vulnerability in an AI product exploited by attackers in the wild. Based on this report, such an exploit would likely affect many organizations simultaneously, with devastating impact and hard lessons for the future.

Check out my article below to learn more about Apple Intelligence Jailbreaks. Thanks for reading.